One of the new shopping discovery elements that Google is testing is a “Shop the Look” feature. This feature allows users to explore and purchase products directly from images. When a user sees a picture of a room with furniture or an outfit with various clothing items, they can simply click on the items they are interested in and be directed to a page where they can buy those exact products. This feature not only makes it easier for users to find and purchase the products they see in images, but it also provides a seamless shopping experience without the need to search for each individual item.

In addition to the “Shop the Look” feature, Google is also experimenting with a “Discover Similar Products” option. This feature aims to help users find alternative products that are similar to the ones they are currently viewing or interested in. For example, if a user is looking at a specific pair of shoes, Google’s algorithm will analyze the product and suggest other similar styles or brands that the user might be interested in. This not only expands the user’s options but also allows them to discover new products that they may not have considered before.
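How Google actually scores similarity is not public, so the following is only a minimal sketch of one common approach: represent each product as a numeric feature vector and rank other products by cosine similarity. The catalog, the product IDs, and the three made-up feature dimensions are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction, so treat it as dissimilar
    return dot / (norm_a * norm_b)


def similar_products(target_id, catalog, top_k=2):
    """Rank every other product in the catalog by similarity to the target."""
    target = catalog[target_id]
    scored = [
        (cosine_similarity(target, vector), product_id)
        for product_id, vector in catalog.items()
        if product_id != target_id
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [product_id for _, product_id in scored[:top_k]]


# Hypothetical catalog: each vector is (sportiness, formality, lightness of color).
CATALOG = {
    "white_sneaker": [1.0, 0.1, 0.9],
    "cream_sneaker": [0.9, 0.2, 0.8],
    "black_boot": [0.1, 0.9, 0.2],
    "tan_loafer": [0.5, 0.6, 0.3],
}

print(similar_products("white_sneaker", CATALOG))
```

In a real system the vectors would more plausibly come from a learned image or text embedding than from hand-picked features, but the ranking step would look much the same.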

Furthermore, Google is testing a feature called “Product Recommendations.” This feature uses machine learning algorithms to analyze a user’s browsing and purchasing history to provide personalized product recommendations. By understanding the user’s preferences and past interactions, Google can suggest products that are tailored to their individual tastes. This feature not only saves users time by presenting them with products they are likely to be interested in, but it also enhances the overall shopping experience by providing a more personalized and curated selection.

Overall, these new shopping discovery elements being tested by Google have the potential to transform the way we find and purchase products online. By enabling users to shop directly from images, discover similar products, and receive personalized recommendations, Google is making the online shopping experience more intuitive, efficient, and enjoyable. As these features continue to evolve and improve, users can expect an even more seamless and personalized shopping experience in the future.

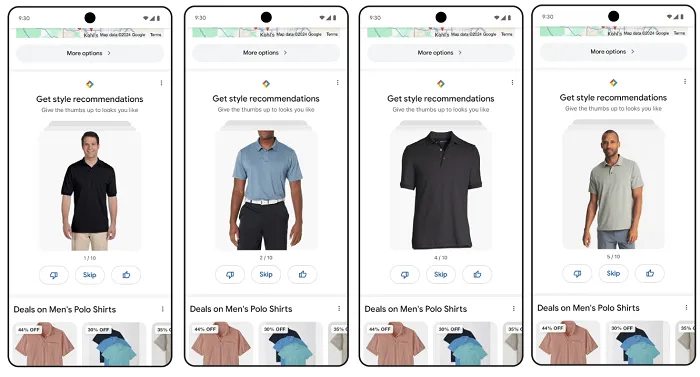

Related Style Recommendations

One of the new features that Google is testing is related style recommendations. When you search for a product, Google will now display a gallery of images that are related to your search. This will allow you to explore different styles and options that are similar to what you are looking for.

What sets this feature apart is that Google is using artificial intelligence to generate these recommendations. The AI technology analyzes your search query and matches it with relevant images, taking into consideration factors such as color, style, and design. As a result, the gallery reflects the look you searched for rather than just the product category.

But it doesn’t stop there. Google is also giving users the ability to refine their search results based on these style recommendations. You can upvote or downvote images, or swipe right or left on a series of related product images. This feedback helps Google understand your preferences even better and further personalize your shopping experience.

For example, let’s say you are searching for a new sofa for your living room. When you enter your search query, Google’s AI technology will analyze the keywords and provide you with a curated gallery of sofa images that match your search criteria. These images will showcase different styles, colors, and designs, allowing you to explore various options and find the perfect sofa for your space.

As you browse through the gallery, you can upvote or downvote images based on your preferences. This feedback will help Google’s AI algorithms understand your style preferences even better. If you upvote images with a specific color or design, Google will take note of that and prioritize similar options in future recommendations.

Additionally, you can swipe right or left on a series of related product images to indicate whether you like or dislike a particular style. This gesture-based interaction allows for a seamless and intuitive browsing experience. By swiping right on images that catch your eye, you are signaling to Google that you are interested in that particular style. On the other hand, swiping left indicates that the style is not to your liking.

Google’s AI technology takes all this feedback into account and continuously refines its recommendations based on your preferences. This means that the more you interact with the related style recommendations feature, the more personalized your shopping experience becomes.
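The feedback loop described above can be sketched as a simple tag-weighting scheme. This is an illustrative assumption, not Google's implementation: the `StylePreferences` class, the style tags, and the plus-one/minus-one update rule are all hypothetical.

```python
from collections import defaultdict


class StylePreferences:
    """Tracks a running weight per style tag based on likes and dislikes."""

    def __init__(self):
        self.weights = defaultdict(float)

    def record_feedback(self, tags, liked):
        """Raise the weight of each tag on a liked image, lower it on a disliked one."""
        delta = 1.0 if liked else -1.0
        for tag in tags:
            self.weights[tag] += delta

    def score(self, tags):
        """Sum the learned weights of an item's tags; unseen tags count as zero."""
        return sum(self.weights.get(tag, 0.0) for tag in tags)

    def rank(self, items):
        """Return item names ordered from best to worst match."""
        return sorted(items, key=lambda name: self.score(items[name]), reverse=True)


# Hypothetical sofa gallery, each image labeled with style tags.
SOFAS = {
    "blue_velvet": {"blue", "classic"},
    "grey_loveseat": {"grey", "classic"},
    "grey_sectional": {"grey", "modern"},
}

prefs = StylePreferences()
prefs.record_feedback({"grey", "modern"}, liked=True)    # upvote or swipe right
prefs.record_feedback({"blue", "classic"}, liked=False)  # downvote or swipe left

print(prefs.rank(SOFAS))
```

Each additional vote shifts the weights a little further, which is the sense in which more interaction yields more personalized results.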

In addition to refining your search results, Google’s related style recommendations also provide inspiration and ideas. By showcasing a variety of styles and designs, this feature helps you discover new trends and options that you may not have considered before. It opens up a world of possibilities and makes the shopping experience more enjoyable and exciting.

Body Shape Matching

Body shape matching is just one of the many innovative features that Google is working on to enhance the online shopping experience. With the advancement of technology and the increasing popularity of e-commerce, it has become more important than ever to find ways to bridge the gap between the virtual world and the physical reality of trying on clothes.

Traditionally, shopping for clothes online has been a hit or miss experience. You browse through countless websites, trying to find the perfect item, only to be disappointed when it arrives and doesn’t fit quite right. This frustration often leads to returns, which not only waste time but also create additional costs for both the consumer and the retailer.

However, with the introduction of body shape matching, Google is aiming to eliminate these challenges. By analyzing your body type and comparing it to the dimensions and proportions of different clothing items, Google can provide personalized recommendations that are more likely to flatter your figure. This means that you can confidently make purchases knowing that the clothes are not only stylish but also tailored to your specific body shape.

The technology behind body shape matching is complex and relies on advanced algorithms and machine learning. Google’s AI system is trained on vast amounts of data, including measurements of different body types and the corresponding fit of various clothing items. This allows the system to make accurate predictions and suggest the best-fitting options for each individual.
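As a rough illustration of comparing body measurements with garment dimensions, the sketch below picks the size whose measurements are closest to the shopper's. It is a simple nearest-match rule, not the trained model the article describes, and the size chart, measurement names, and values are hypothetical.

```python
def fit_distance(body, garment):
    """Sum of absolute differences, in cm, across the shopper's measurements."""
    return sum(abs(body[key] - garment[key]) for key in body)


def best_size(body, size_chart):
    """Return the size label whose measurements are closest to the body."""
    return min(size_chart, key=lambda size: fit_distance(body, size_chart[size]))


# Hypothetical size chart for a single garment.
SIZE_CHART = {
    "S": {"chest_cm": 90, "waist_cm": 76, "hip_cm": 92},
    "M": {"chest_cm": 98, "waist_cm": 84, "hip_cm": 100},
    "L": {"chest_cm": 106, "waist_cm": 92, "hip_cm": 108},
}

shopper = {"chest_cm": 99, "waist_cm": 82, "hip_cm": 101}
print(best_size(shopper, SIZE_CHART))
```

A learned model could replace `fit_distance` with a prediction of how well each size fits, using past fit outcomes as training data, while keeping the same "score every size, pick the best" structure.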

Imagine no longer ordering clothes online and hoping for the best. With body shape matching, you can be more confident that the clothes you choose will fit, which cuts down on the hassle of returns and exchanges. This feature not only makes online shopping more convenient but also helps individuals make better-informed decisions about their personal style.

Furthermore, body shape matching has the potential to revolutionize the fashion industry as a whole. By providing consumers with personalized recommendations, it can help reduce the environmental impact of the fashion industry by minimizing the number of returns and ultimately reducing waste. Additionally, it can contribute to a more inclusive and diverse representation of body types in the fashion world, as the technology takes into account a wide range of body shapes and sizes.

In short, Google’s body shape matching feature is an exciting development that has the potential to transform the way we shop for clothes online. By leveraging AI and machine learning, it addresses the challenge of finding clothes that fit well and flatter our figures. If it works as intended, shopping for clothes online becomes a more enjoyable and personalized experience, saving us time, money, and frustration.

Generative AI Examples

Beyond product search, generative AI can also be applied in fashion. Companies like Zara and H&M are reportedly using this technology to create virtual models that showcase their clothing lines. These virtual models have realistic features and can be dressed in various outfits, allowing customers to see how the clothes would look on a person without the need for physical models or mannequins.

Generative AI can also be used in the automotive industry. Car manufacturers are using it to generate 3D models of their vehicles so that customers can visualize different options and configurations. By entering parameters such as color, trim, and accessories, customers can see a realistic representation of their desired car before making a purchase.

The technology also has applications in architecture and interior design. Designers can use it to generate virtual renderings of buildings and spaces, allowing clients to visualize different design concepts. By varying parameters such as layout, materials, and lighting, designers can create multiple variations of a project, helping clients make informed decisions.

Overall, generative AI has the potential to change how several industries work by providing more personalized experiences. Whether in product search, fashion, automotive, or architecture, it opens up new possibilities for creativity, exploration, and decision-making. As AI continues to advance, we can expect to see even more applications in the future.

Impact on Product Discovery and Retailers

These new shopping elements being tested by Google have the potential to significantly impact how people find products in the app and how retailers display their products.

By providing related style recommendations and generative AI examples, Google is making it easier for users to discover new products and explore different options. This can lead to increased sales for retailers, as users are more likely to find products that align with their preferences and tastes.

Additionally, the body shape matching feature can help boost customer satisfaction and reduce returns. By recommending products that are likely to fit well, Google is addressing a common pain point in online shopping and improving the overall experience for users.

Moreover, these new shopping elements have the potential to level the playing field for retailers of all sizes. In the past, smaller retailers may have struggled to compete with larger, more established brands that had greater visibility and resources. However, with Google’s new features, smaller retailers can now have a chance to showcase their products to a wider audience. The related style recommendations and generative AI examples can help these smaller retailers gain exposure and attract customers who may not have discovered their products otherwise.

Furthermore, these new shopping elements can also benefit retailers by providing valuable insights and data. With the use of AI and machine learning, Google can analyze user behavior and preferences, allowing retailers to better understand their target audience. This data can help retailers make informed decisions about their product offerings, pricing strategies, and marketing campaigns. By leveraging these insights, retailers can optimize their online presence and increase their chances of success in the competitive e-commerce landscape.

Overall, these new shopping elements being tested by Google reflect its commitment to innovation and to improving the user experience. Using AI and machine learning, Google is changing the way we discover and shop for products, making the process easier and more personalized. These advancements benefit users by providing a seamless, tailored shopping experience, and they help retailers reach a broader audience and make data-driven decisions for their businesses.